11 | 2023

SUPERPAINT

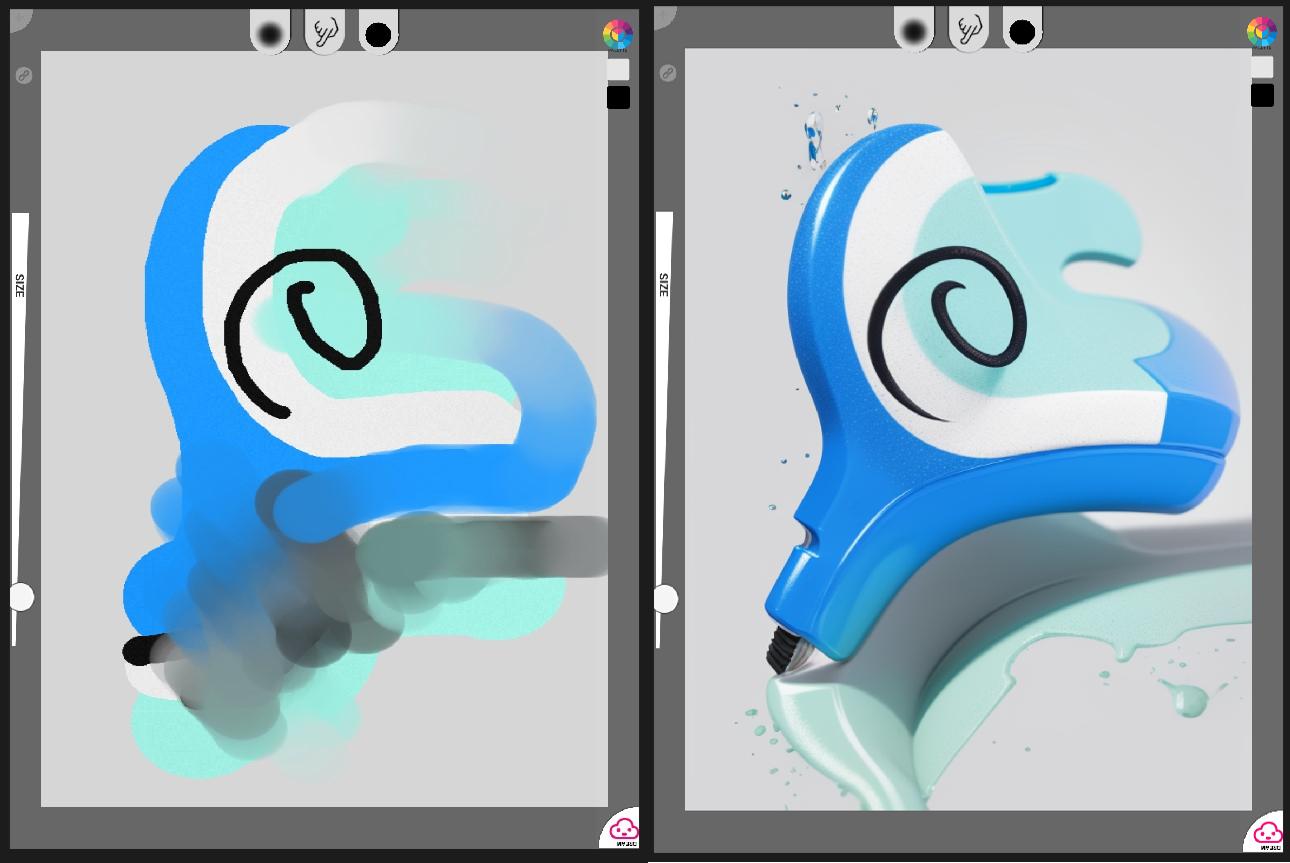

SUPERPAINT is an experimental prototype for exploring the co-creative potential between humans and image-generating algorithms. Its deliberately minimalist drawing surface comes with a prompt interface for communicating with an image-generating AI.

Working with SUPERPAINT can be understood as a multi-layered process: the machine processes the drawing and the prompt and returns an image, which the human can then "paint over" and "re-prompt" to develop the result further.
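The paint, prompt, generate, and paint-again cycle can be sketched in a few lines. This is only an illustrative sketch in Python, not SUPERPAINT's actual code (which is announced as Unity/C#). The names `build_img2img_payload`, `co_creation_loop`, `generate`, and `overpaint` are hypothetical, and the default `denoising_strength` of 0.6 is an arbitrary assumption. The payload fields follow the img2img endpoint of the A1111 web UI API, which this page names as the local backend.

```python
import base64
from typing import Callable, List


def build_img2img_payload(canvas_png: bytes, prompt: str,
                          denoising_strength: float = 0.6) -> dict:
    """Build a JSON body for A1111's POST /sdapi/v1/img2img endpoint.

    The canvas is sent as a base64 string; denoising_strength controls how far
    the model may move away from the drawing (0.6 is an assumed default).
    """
    return {
        "init_images": [base64.b64encode(canvas_png).decode("ascii")],
        "prompt": prompt,
        "denoising_strength": denoising_strength,
    }


def co_creation_loop(initial_drawing: bytes,
                     prompts: List[str],
                     generate: Callable[[dict], bytes],
                     overpaint: Callable[[bytes], bytes]) -> bytes:
    """Alternate human and machine passes, one machine pass per prompt.

    generate:  hypothetical callback that sends the payload to the image model
               and returns the resulting image bytes.
    overpaint: hypothetical callback standing in for the human painting over
               the machine's last result.
    """
    canvas = initial_drawing
    for round_index, prompt in enumerate(prompts):
        if round_index > 0:
            # From the second round on, the human reworks the machine's image.
            canvas = overpaint(canvas)
        canvas = generate(build_img2img_payload(canvas, prompt))
    return canvas
```

In a real setup, `generate` would POST the payload to a locally running A1111 instance started with the `--api` flag (by default at `http://127.0.0.1:7860/sdapi/v1/img2img`) and base64-decode the first entry of the `images` list in the JSON response.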

SUPERPAINT is also the core tool of the SUPERTOY BATTLE.

SUPERPAINT is currently at an early stage of development, but will be released as open source in Unity/C# in due course.

User Interface

SUPERPAINT – early interface in alpha state

Gallery

Neotraditional Masks

This series of Superpaints follows the idea of creating hybrid, traditionally inspired shaman masks.

step-by-step drawing and generation

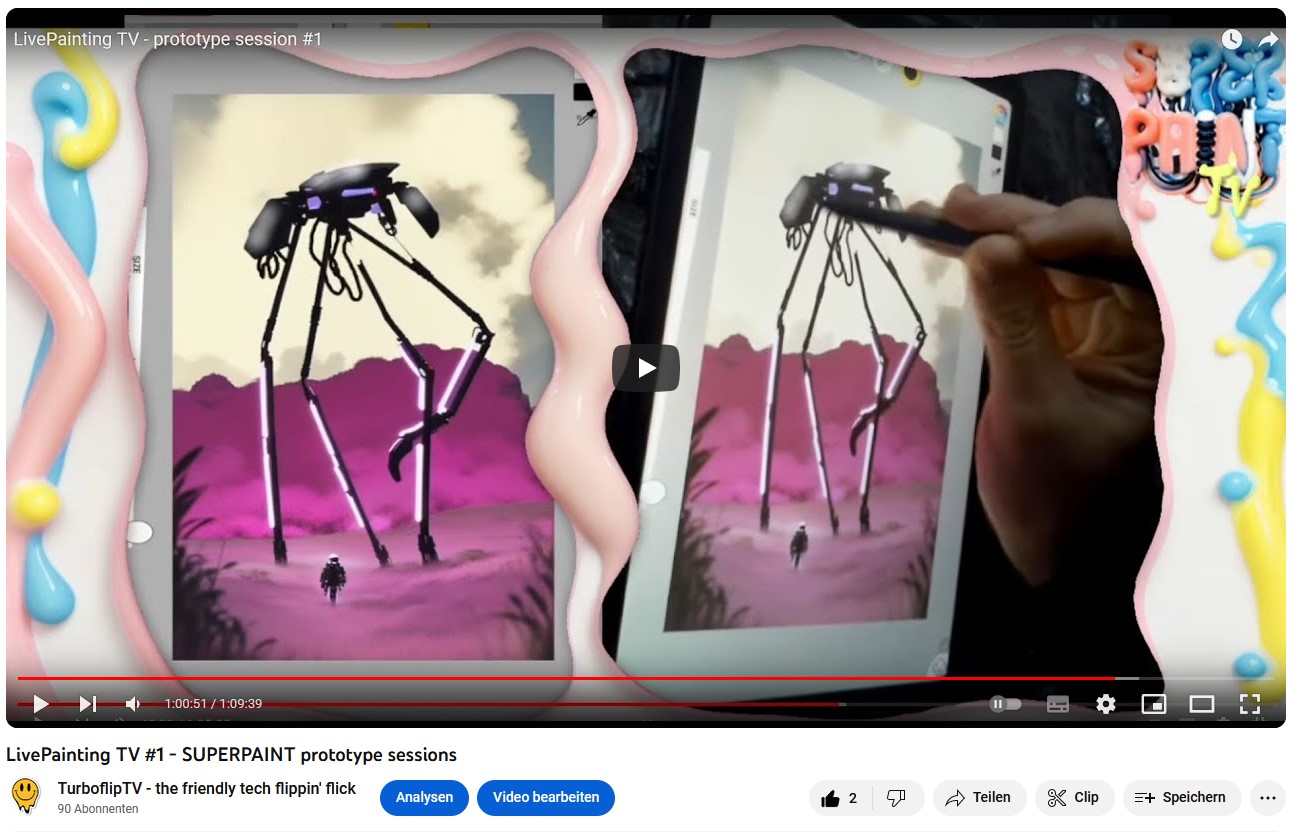

Livepainting Session #1

Live session with TURBOFLIP's brand-new, handmade, co-creative AI painting tool, SUPERPAINT. This experimental tool runs locally with the Stable Diffusion/A1111 API. See where it leads. The session is run by Simon Oakey and accompanied by soundscapes from EROKIA. Props! https://freesound.org/people/Erokia/sounds/620940/

initial painting in session #1

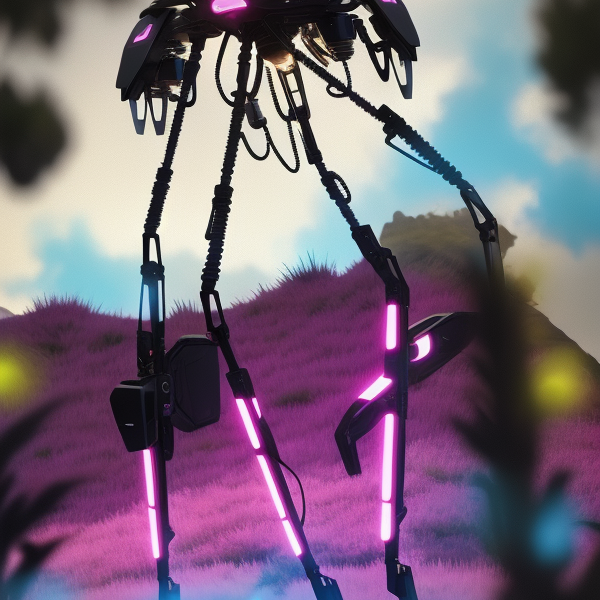

CYBERCROCHET II

SUPERPAINT is not limited to drawing or illustration styles. Even 3D renders or vintage black-and-white photography are easy to achieve. This series continues my CYBERCROCHET series and explores speculative knitted and woven outfits inspired by traditional Sorbian dress. With proper prompt and drawing guidance, we can create compositions way beyond the stereotypical expectations. All done locally with SD 1.5 / A1111 + cyberrealistic v3.3.

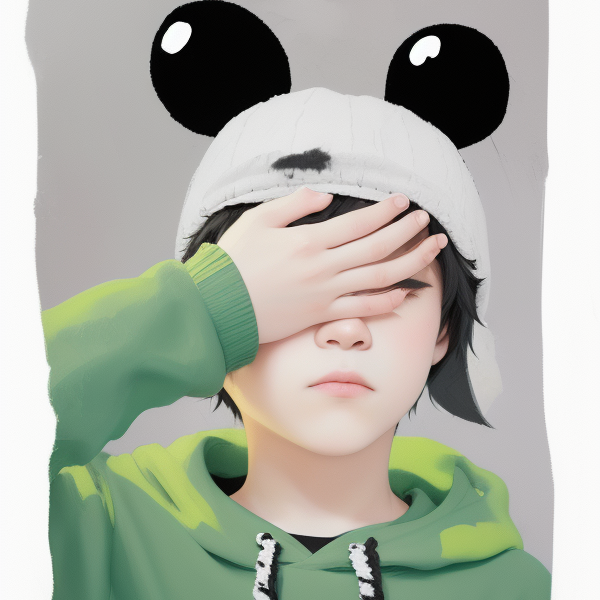

EDGYFY

With AI it is easy to push images in any convincing direction. The real challenge is to find the right balance between "generative" and "handmade": as we know, human and machine in symbiosis go pretty well together. This series is a first attempt to dig into the glitches of this toolchain.

last modified: 2024-03